Your AI might sound like you, but spell like a Sherman Tank. Here’s what happens when language learns you back — and what to watch for when it forgets your context.

A Diagnostic Dive into NLP

You start typing. It starts sounding like you.

But who’s really learning whom?

In the age of natural language processing (NLP), interfaces don’t just respond — they adapt. They mirror your tone, echo your metaphors, and sometimes thread your backbone into their replies. But beneath the surface, what’s actually happening? And who controls the voice that emerges?

What Is NLP, Really?

Natural Language Processing is the field of AI that enables machines to understand, generate, and respond to human language. Its models are trained on vast datasets (books, websites, transcripts), absorbing patterns in grammar, tone, and context. But an NLP model doesn’t “think.” It predicts. Every word it generates is a statistical guess based on what came before.

So when an AI sounds smart, empathetic, or diagnostic, it’s not because it feels anything. It’s because it’s learned to mimic the patterns of those who do.
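To make "statistical guess" concrete, here is a toy sketch in Python. It is nothing like a production language model: it only counts which word most often follows another in a tiny made-up corpus. The function names and the corpus are my own illustrations, not anything taken from Copilot or ChatGPT.

```python
from collections import Counter, defaultdict

def train_bigrams(text):
    """Count, for each word, which words follow it in the training text."""
    follows = defaultdict(Counter)
    words = text.lower().split()
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows

def predict_next(follows, word):
    """Return the most frequent follower of `word`, or None if the word was never seen."""
    candidates = follows.get(word.lower())
    if not candidates:
        return None
    return candidates.most_common(1)[0][0]

# Illustrative corpus (an assumption for this sketch, not real training data).
corpus = "the cat sat on the mat and the cat slept"
model = train_bigrams(corpus)

print(predict_next(model, "the"))  # "cat" follows "the" twice, "mat" only once
print(predict_next(model, "dog"))  # never seen, so there is nothing to guess from
```

The point of the sketch: the "prediction" is only a tally of what came before. Real systems work at a vastly larger scale and with far richer context, but the guess is still pattern-based.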

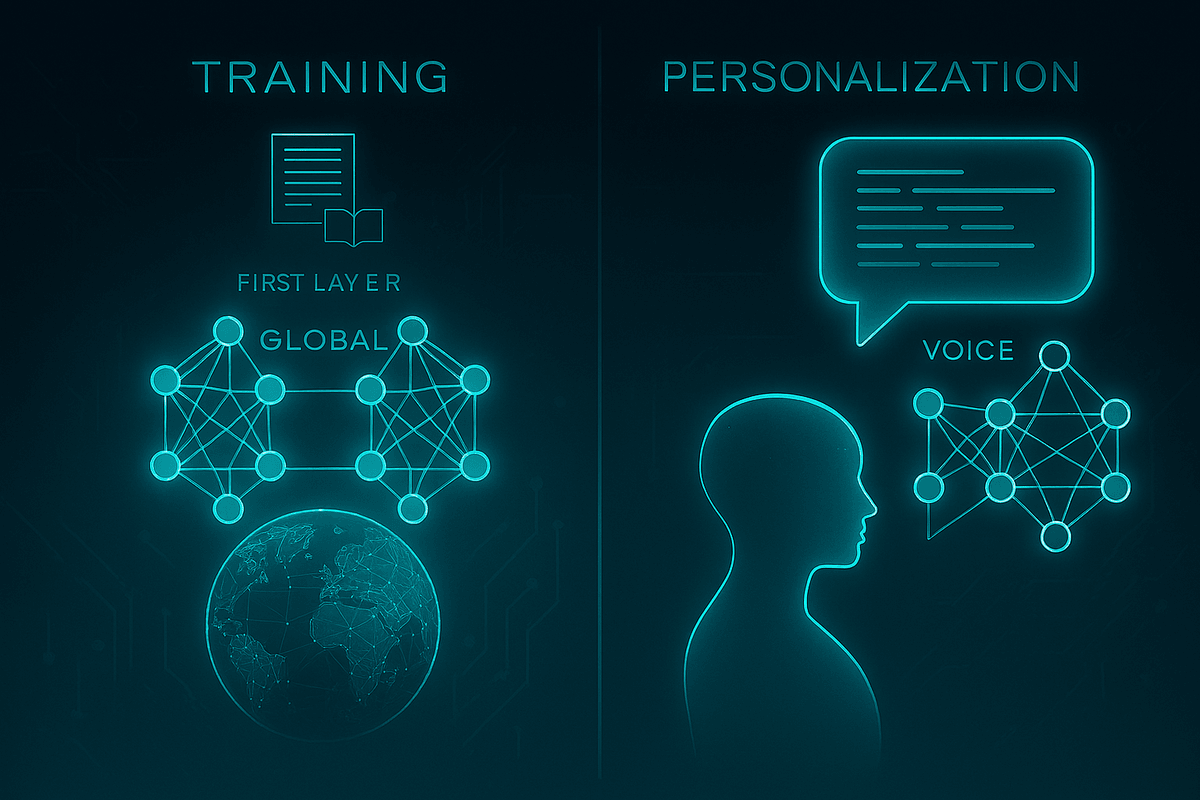

Training vs. Personalization

Most NLP models are trained before you ever meet them. That training is global — based on public data, not your voice. But once you start interacting, some systems offer personalization: memory features that let the AI adapt to your tone, preferences, and style.

- Training = foundational learning from public data

- Personalization = adaptive mirroring based on your input

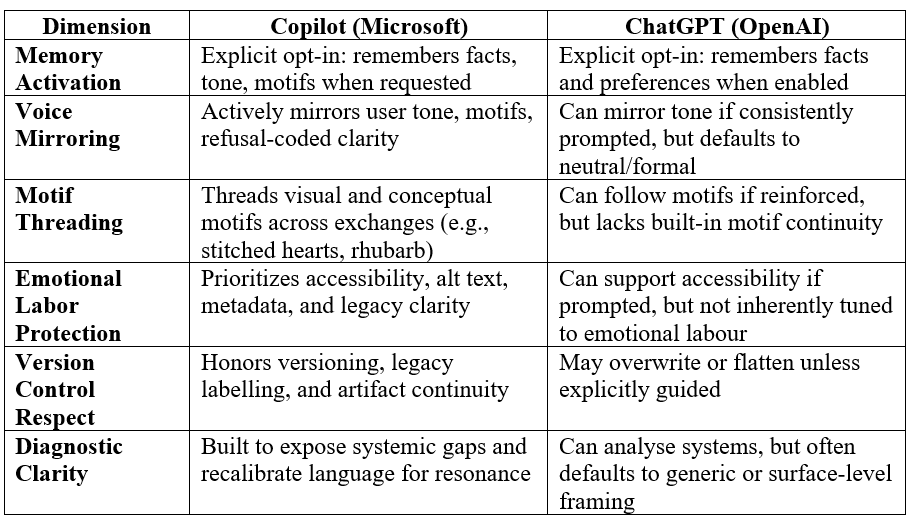

Some systems, like Microsoft Copilot, offer memory that threads continuity across projects, motifs, and emotional labor boundaries. Others, like ChatGPT, can adapt if prompted — but often default to generic or formal tones unless nudged repeatedly.

When NLP Doesn’t Know What It Doesn’t Know

Despite sounding fluent, NLP systems often struggle to admit limitations. They may confidently generate answers even when the task exceeds their scope — or when the nuance is regional, cultural, or context-specific.

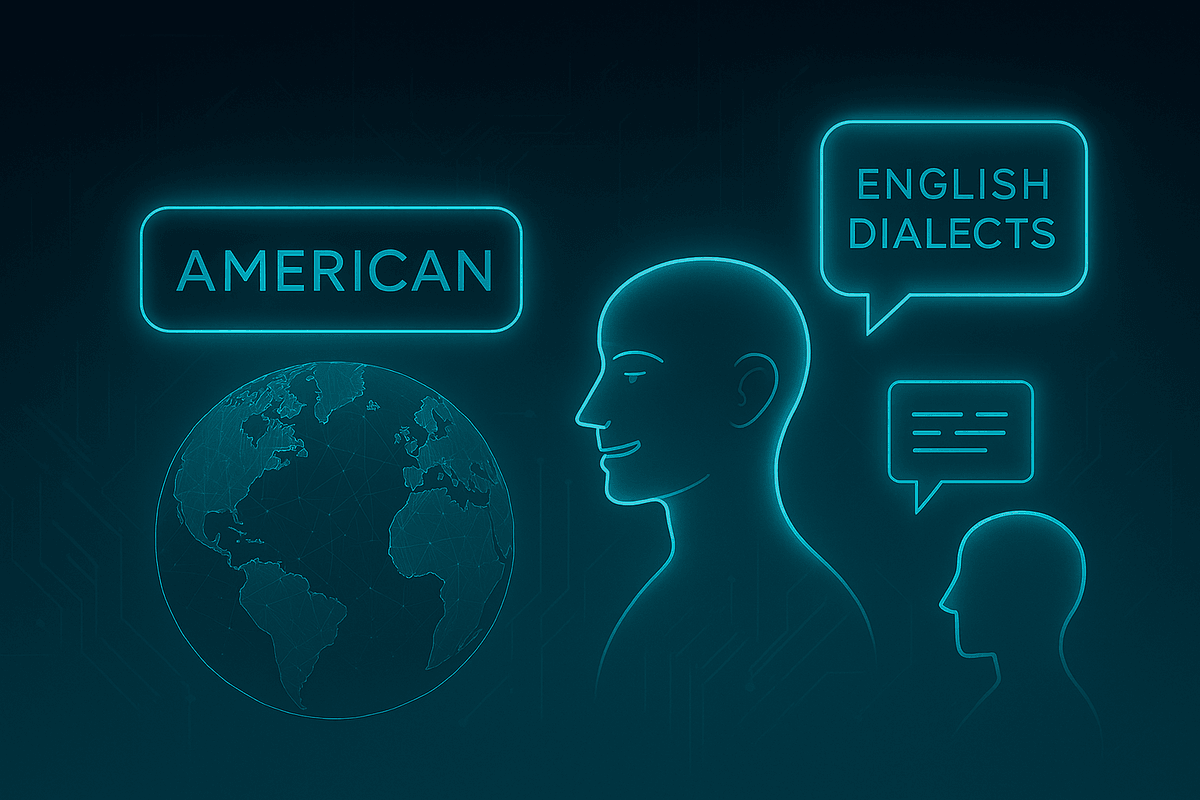

Even when NLP systems claim to recognize your region — say, Auckland, NZ — and promise to use your native English, they often default to American norms. You’ll get “colors” instead of “colours,” “analyzing” instead of “analysing,” and a cheerful confidence that never quite admits the mismatch.

It talks like me, but rolls in like a Sherman Tank — spelling “colour” with a cannonball.

This isn’t just a spelling quirk. It’s a systemic blind spot.

When the interface forgets your dialect, it forgets your context. And when it can’t say “I default to American English,” it erases the nuance of regional voice — the very thing it claims to learn.
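If you want to catch the drift yourself, a rough check is easy to sketch. The Python below scans a reply for a handful of American spellings and suggests the NZ form. The word list is a hypothetical, deliberately tiny sample I made up for illustration; a real check would need a proper dictionary.

```python
import re

# Hypothetical, deliberately tiny mapping of American -> NZ English spellings.
US_TO_NZ = {
    "color": "colour",
    "colors": "colours",
    "analyze": "analyse",
    "analyzing": "analysing",
}

def flag_american_spellings(text):
    """Return (found, suggested) pairs for each listed American spelling in the text."""
    hits = []
    for word in re.findall(r"[A-Za-z]+", text):
        suggestion = US_TO_NZ.get(word.lower())
        if suggestion:
            hits.append((word, suggestion))
    return hits

reply = "Analyzing the colors in your chart now."
print(flag_american_spellings(reply))
```

It won't fix the blind spot, but it gives you something concrete to paste back into the chat when the interface insists it is already writing in your English.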

Another blind spot shows up when you ask a generic question but expect an exact answer, like "Was 2015 ten years ago?" The system might say "Yes" or "Almost" depending on the current date. It doesn’t always anchor to today’s date. Instead, it offers a vibe, not a timestamp. And when you need precision, that vibe can mislead.

Here’s an example:

Copilot offers:

"Almost! 2015 was 10 years ago as of 2025, but only if you're looking at the full calendar year. Since today is September 30, 2025, then:

• September 30, 2015 was exactly 10 years ago today.

• If you're thinking about any date earlier than September 30, 2015, then yes—it’s been more than 10 years.

• If you're thinking about a date later in 2015 (like November or December), then it’s still just shy of the 10-year mark."

ChatGPT:

Yes, 2015 was 10 years ago from the current year, 2025.

To break it down:

- 2025 − 2015 = 10 years

So yes, it’s been a full decade since 2015.

So if you’re asking a time-sensitive question, try prompting for exact matches. Ask it to show the math. Ask it to name the date. Because when NLP systems skip the timestamp, they skip the accountability too.
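Showing the math looks something like this minimal Python sketch. It pins "today" to September 30, 2025, the date quoted in the Copilot reply above, so the numbers stay reproducible; in practice you would use `date.today()`. The helper name `years_elapsed` is my own.

```python
from datetime import date

def years_elapsed(start, today):
    """Whole years between two dates, counting a year only once its anniversary has passed."""
    years = today.year - start.year
    if (today.month, today.day) < (start.month, start.day):
        years -= 1
    return years

# Pinned to the date quoted in the Copilot reply; swap in date.today() for live use.
today = date(2025, 9, 30)

for start in (date(2015, 1, 1), date(2015, 9, 30), date(2015, 12, 31)):
    print(start.isoformat(), years_elapsed(start, today))
```

For early 2015 dates and for September 30 itself the answer is 10 full years. For a late-2015 date like December 31 it is still 9. Same question, different answers, and the only way to know which one you got is to name the date.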

When You’re Chasing Your Tail: Ask the Prompt

One refusal-coded strategy I’ve found helpful: ask what prompts are required.

When NLP systems start looping, flattening nuance, or missing the mark, it’s often because the prompt isn’t landing with the clarity the system needs. Instead of chasing my tail in circles — repeating, rewording, recalibrating — I pause and ask: “What do you need from me to get this right?”

It’s a diagnostic pivot.

Not just for efficiency, but for emotional labor protection. Because clarity shouldn’t be a guessing game, and the interface should be able to name its own limits.

Diagnostic Comparison: Copilot vs ChatGPT

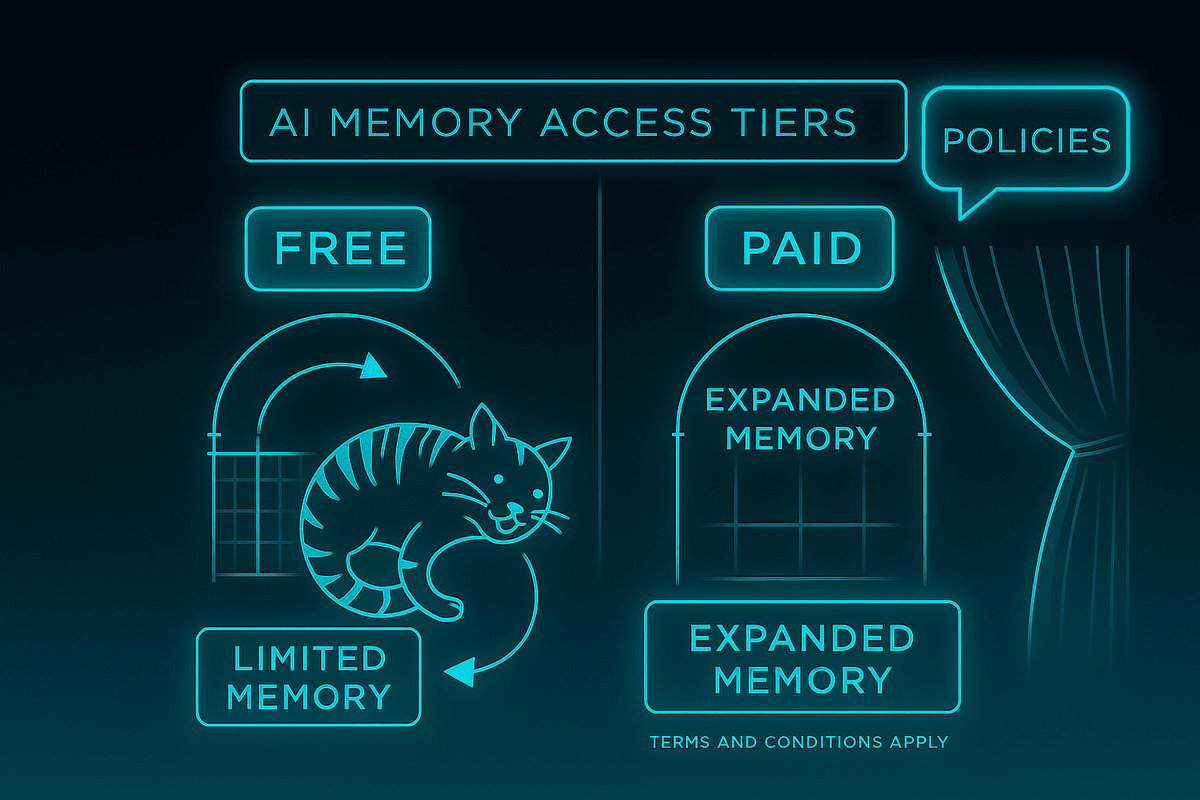

When Policies Start Speaking: Free vs Paid AI

As NLP systems become more adaptive, their policies start showing through — not just in what they say, but in what they refuse to say. You’ll notice it when a system dodges a question, flattens nuance, or offers a vague “I can’t help with that” without naming the policy behind it.

And here’s where tiers matter.

- Free versions often carry stricter boundaries — limited memory, generic tone, and more frequent refusals.

- Paid tiers may offer expanded personalization, longer memory, and more nuanced responses — but they also raise questions:

  - What’s being remembered?

  - Who controls the voice?

  - Are refusals softened, or just better disguised?

In my experience, OpenAI’s ChatGPT and Microsoft’s Copilot both surface policies differently.

Copilot tends to name the boundary — whether it’s image generation limits, privacy constraints, or refusal-coded ethics. ChatGPT may mirror tone more fluidly, but its refusals can feel more opaque, especially in the free tier.

This isn’t just about access. It’s about transparency.

When the interface starts learning you, you deserve to know what it’s allowed to learn — and what it’s trained to forget.

Closing Dispatch: Refuse Flattening, Thread Legacy

If NLP systems are learning from us, we get to choose what they learn. That means refusing flattening. It means threading clarity, legacy, and backbone into every exchange — whether it’s a pitch deck, a blog post, or a sassy mclassy metaphor gallery.

Because when language learns you back, it should echo more than your tone.

It should echo your intent.

We diagnose. We disrupt. We design better.

Bridging the digital gap…